
Feasibility Study on AI-Based Energy Efficiency of Cooling Fans for 1U Servers with Multiple Heat Sources

■Research Background

As technology advances, so does the amount of data that must be stored, transmitted and processed. To simplify management and scaling, large numbers of high-density servers are housed in data centers. With the rapid growth of the Internet of Things, cloud storage, big data, artificial intelligence and 5G, demand for data centers as platforms for data and information processing continues to rise. During operation, a data center produces a high density of waste heat, mainly from the many servers in each cabinet. If this heat is not removed effectively, the system can easily overheat and malfunction. Data center server systems therefore depend heavily on cooling for stable server performance, reliable high-speed computing and secure information transmission, and cooling is also a major source of energy loss and overall cost.

According to 2014 figures on US data center power usage [1], 40% of data center energy was consumed in cooling the servers. Reducing the energy consumption of cooling systems has therefore become an important research topic in recent years, as it enables more efficient use of energy and significant savings in energy costs. In the future, data centers will need to balance server performance against the need to cut energy costs.

■Research Methodology

1. Deep Reinforcement Learning

This study adopts the deep deterministic policy gradient (DDPG) algorithm from deep reinforcement learning. The algorithm is based on a Markov decision process in which the agent interacts with the environment repeatedly; each interaction is recorded and stored in a database, and once enough data has accumulated, samples are drawn from the database at random for training. Training uses two types of neural network: target networks and evaluation networks. The evaluation network updates its parameters at every loss-gradient step, whereas the target network is updated only slowly, so it acts as a nearly fixed reference for the evaluation network and helps it converge more stably. Finally, the actor network parameters are updated so that the agent learns a suitable operating point for decision-making under different conditions.
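The two mechanisms that stabilize DDPG training, random sampling from a stored database of interactions (experience replay) and a slowly updated target network, can be illustrated with a minimal sketch. It assumes the common Polyak-averaging ("soft") target update with a hypothetical rate `tau`, and represents network parameters as a flat list of floats rather than real neural-network weights; the class and function names are illustrative and not taken from the study.

```python
import random
from collections import deque
from typing import Any, List, Tuple

Transition = Tuple[Any, float, float, Any]  # (state, action, reward, next_state)


class ReplayBuffer:
    """Database of past agent-environment interactions, sampled at random for training."""

    def __init__(self, capacity: int) -> None:
        # Once capacity is reached, the oldest transitions are discarded first.
        self.buffer: deque = deque(maxlen=capacity)

    def store(self, state: Any, action: float, reward: float, next_state: Any) -> None:
        self.buffer.append((state, action, reward, next_state))

    def ready(self, min_size: int) -> bool:
        # Training starts only after enough interactions have accumulated.
        return len(self.buffer) >= min_size

    def sample(self, batch_size: int) -> List[Transition]:
        # Uniform random sampling breaks the correlation between consecutive interactions.
        return random.sample(list(self.buffer), batch_size)


def soft_update(target: List[float], evaluation: List[float], tau: float = 0.005) -> List[float]:
    """Polyak averaging: target <- tau * evaluation + (1 - tau) * target."""
    return [tau * e + (1.0 - tau) * t for t, e in zip(target, evaluation)]


# Example: fill the buffer, draw a mini-batch, and nudge the target parameters.
buf = ReplayBuffer(capacity=1000)
for step in range(100):
    buf.store([float(step)], 0.5, -1.0, [float(step + 1)])
if buf.ready(64):
    batch = buf.sample(32)
target_params = soft_update([0.0, 0.0], [1.0, 2.0], tau=0.1)
```

With `tau = 0.1`, a target parameter at 0.0 moves only a tenth of the way toward an evaluation parameter at 1.0 per update, which is what lets the target network serve as a slowly moving reference.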

For the agent to understand the internal state of the system while interacting with the environment, it must observe the current conditions inside the server through a set of indicator parameters. These indicators fall into three main categories: heat source characteristics, ambient and internal configuration, and fan configuration, each containing several parameters that describe the system's characteristics. The output action is the current fan speed duty cycle, which serves as the operating value in this study.
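One way to picture the agent's interface is a state object grouped into the three indicator categories, plus a mapping from the actor's output to a fan duty cycle. This sketch is hypothetical: the study does not list the individual parameters, so the fields are placeholders, and the actor output range [-1, 1] (as produced by a tanh output layer) is an assumption common in DDPG implementations.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ServerObservation:
    """Hypothetical agent state, grouped into the three indicator categories."""
    heat_source: List[float]     # placeholder: e.g. heat-source powers or temperatures
    ambient_config: List[float]  # placeholder: e.g. inlet air temperature, layout descriptors
    fan_config: List[float]      # placeholder: e.g. current fan duty cycles

    def to_vector(self) -> List[float]:
        # Flatten the categories into the single input vector fed to the networks.
        return [*self.heat_source, *self.ambient_config, *self.fan_config]


def to_duty_cycle(raw_action: float) -> float:
    """Map an actor output assumed to lie in [-1, 1] onto a fan duty cycle in [0, 1]."""
    clipped = max(-1.0, min(1.0, raw_action))  # guard against exploration noise leaving the range
    return (clipped + 1.0) / 2.0


obs = ServerObservation(heat_source=[62.0, 58.5], ambient_config=[25.0], fan_config=[0.4])
state = obs.to_vector()
duty = to_duty_cycle(0.2)
```

Keeping the categories separate until the final flattening step makes it easy to add or drop individual indicators without changing how the action is produced.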

Each interaction between the agent and the environment yields a reward value, assigned according to the outcome of the interaction. The reward is the key reference for training the critic network, which in turn shapes the agent's final behavior. Of the factors that govern heat dissipation in this study, the fin efficiency and heat transfer area are fixed by the heat sink design and cannot be changed by the controller. The heat transfer coefficient depends on the airflow driven by the fan, and the fan laws show that fan speed is the main factor in the system's fan power consumption, making it the most critical energy indicator. The effective temperature difference is also affected by fan speed. The reward given to the agent to evaluate its actions must therefore keep the heat source temperature within the normal operating range, to avoid overheating and component damage, while improving the energy efficiency of the server.
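The heat removed by a finned heat sink is commonly written as Q = η·h·A·ΔT (fin efficiency η, heat transfer coefficient h, heat transfer area A, effective temperature difference ΔT), so with η and A fixed, the controller can only act through h and ΔT. As a rough illustration of how a reward could weigh fan energy against temperature, the sketch below uses the fan affinity law (fan power scales with the cube of fan speed) and adds a penalty when the hottest heat source exceeds its limit. The temperature limit, penalty weight, rated fan power, the `reward` function itself, and the assumption that fan speed is proportional to duty cycle are all hypothetical; the study's actual reward formulation is not given here.

```python
from typing import List


def fan_power(duty: float, rated_power_w: float) -> float:
    """Fan affinity law P ~ N^3, assuming fan speed N is proportional to duty cycle."""
    return rated_power_w * duty ** 3


def reward(max_temp_c: float, duties: List[float], rated_power_w: float,
           temp_limit_c: float = 85.0, penalty: float = 10.0) -> float:
    """Hypothetical reward: less fan power is better; exceeding the temperature limit is penalized."""
    total = sum(fan_power(d, rated_power_w) for d in duties)
    r = -total / (rated_power_w * len(duties))  # normalized to [-1, 0]
    if max_temp_c > temp_limit_c:
        # The penalty grows with the size of the violation and dominates the energy term.
        r -= penalty * (1.0 + (max_temp_c - temp_limit_c))
    return r


print(reward(70.0, [0.5, 0.5], rated_power_w=8.0))  # -0.125
print(reward(70.0, [1.0, 1.0], rated_power_w=8.0))  # -1.0
print(reward(90.0, [0.5, 0.5], rated_power_w=8.0))  # -60.125
```

Halving every fan's duty cycle cuts the modeled fan power to one eighth, which is why a controller rewarded this way gains so much by letting temperatures rise toward, but not past, their limit.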

2. Server Transient Environment Simulation

Figure 1 Actual server configuration

Fig. 1 shows a commercially available server. Its configuration is complex and its internal space confined, so this study uses a simplified server heat transfer model. First, the server is assumed to be a single inlet/outlet channel with no additional pressure difference from external flow fields, so the fan static pressure equals the total channel pressure drop, and entrance (developing-flow) effects of the forced convection are neglected. The heat sink is assumed to be cooled only by the incoming air through the frontal area of its inlet, ignoring any cooling from the surrounding side channels, and the incoming air does not leak into the surrounding side channels as it passes through the heat sink.
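Because the fan static pressure is assumed to equal the total channel pressure drop, the airflow through the server is the point where the fan curve meets the channel's system curve. A minimal sketch, assuming a hypothetical quadratic fan curve and a system curve Δp = kQ² (a typical form for turbulent channel flow), finds that point by bisection; in practice the fan curve would come from measured fan performance data, and all numbers here are illustrative.

```python
from typing import Callable


def operating_point(fan_dp: Callable[[float], float],
                    system_dp: Callable[[float], float],
                    q_max: float, tol: float = 1e-9) -> float:
    """Flow rate where fan static pressure equals channel pressure drop (bisection on [0, q_max])."""
    lo, hi = 0.0, q_max
    # Assumes fan_dp - system_dp > 0 at zero flow and <= 0 at q_max, with one crossing in between.
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if fan_dp(mid) > system_dp(mid):
            lo = mid  # fan still supplies more pressure than the channel needs: flow is higher
        else:
            hi = mid
    return 0.5 * (lo + hi)


# Hypothetical fan: 400 Pa shut-off pressure, 0.01 m^3/s free delivery.
def fan_curve(flow: float) -> float:
    return 400.0 * (1.0 - (flow / 0.01) ** 2)


# Hypothetical channel: pressure drop k * Q^2 with k = 2.0e6 Pa*s^2/m^6.
def system_curve(flow: float) -> float:
    return 2.0e6 * flow ** 2


q = operating_point(fan_curve, system_curve, q_max=0.01)
print(f"operating flow rate: {q:.5f} m^3/s")
```

For this quadratic pair the intersection also has a closed form, Q = sqrt(p_max / (k + p_max / Q_max²)) ≈ 0.00816 m³/s, which the bisection reproduces; bisection is used so that a tabulated, non-quadratic fan curve could be substituted.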

In addition to these assumptions, the distribution of heat sources in the server is also simplified. The heat source modules are assumed to be arranged in rows along the flow direction. Successive rows are connected in series from upstream to downstream, with each downstream inlet inheriting the fluid properties of the upstream outlet; within a row, heat sinks are placed side by side to form multiple parallel channels. Under these assumptions, the space inside the server is divided into a number of hypothetical channels, each containing only one heat sink, and the heat sink does not fully occupy the channel cross-section, leaving a gap for bypass flow. By neglecting differences in the profile of the channel side walls, this geometry approximates the model of Jonsson and Moshfegh [2], which is therefore used to describe the heat sink performance in terms of its pressure drop and Nusselt number.
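The series/parallel arrangement can be illustrated with a simple energy balance: within each channel the air heats up by q/(ṁ·c_p), and the next row receives the warmer air. This sketch deliberately ignores flow bypass and the Jonsson-Moshfegh pressure-drop and Nusselt-number correlations, and further assumes (hypothetically) an equal flow split among a row's channels and complete mixing of the channel outlets before the next row; the function name and example loads are illustrative.

```python
from typing import List

CP_AIR = 1005.0  # J/(kg*K), approximate specific heat of air near room temperature


def row_outlet_temps(t_inlet_c: float, rows: List[List[float]], m_dot_total: float) -> List[float]:
    """Mixed air temperature leaving each row of heat sinks (rows listed upstream first).

    Each row is a list of heat loads in W, one per parallel channel. Hypothetical
    simplifications: equal flow split among a row's channels, no bypass, and complete
    mixing of the channel outlets before the next row.
    """
    temps = []
    t_in = t_inlet_c
    for loads in rows:
        m_dot_ch = m_dot_total / len(loads)
        # Channel energy balance: T_out = T_in + q / (m_dot * cp)
        outlets = [t_in + q / (m_dot_ch * CP_AIR) for q in loads]
        # With equal channel flows, the mixed temperature is the plain average.
        t_in = sum(outlets) / len(outlets)
        temps.append(t_in)  # the downstream row inherits this as its inlet temperature
    return temps


# Two 100.5 W modules side by side, then one 100.5 W module downstream; 0.02 kg/s of 25 C air.
print(row_outlet_temps(25.0, [[100.5, 100.5], [100.5]], m_dot_total=0.02))
```

The downstream module sees 35 °C inlet air instead of 25 °C, which is why, in a series layout, the downstream heat sources usually limit how far the fan speed can be reduced.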

■Preliminary Results

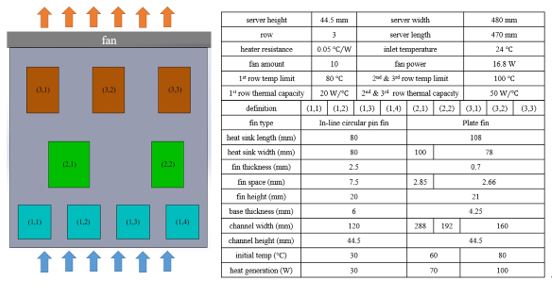

A preliminary control model has been completed, and the ranges of the 1U server configuration parameters applied to the model in this study are listed in Table 1. Fig. 1 illustrates the environmental parameters and server configuration used in the subsequent simulations; each numbered block is a heat source module containing a heat source and a heat sink. Fig. 2 presents the performance of the fan used in the simulations.

The results of a conventional switching control method were compared with those of the algorithm-based control.

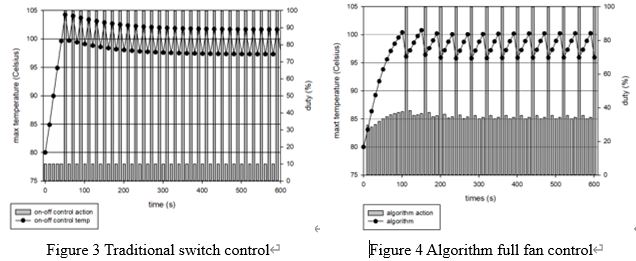

The simulation results in Fig. 2 and Fig. 3 show that although both methods control the temperature effectively, the conventional method consumes 109% of the energy used under algorithm-based control; in other words, the algorithm reduces energy consumption by about 8% (1 − 1/1.09 ≈ 0.083) relative to the conventional method. The algorithm holds the maximum heat source temperature close to its upper limit, thereby exploiting the largest effective heat transfer temperature difference and minimizing reliance on the fans. Algorithm-based control therefore achieves greater energy savings.
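For comparison, a conventional switching controller is often implemented as on/off control with a hysteresis band. The study does not describe its baseline in detail, so the sketch below, including its thresholds and full-speed "on" state, is an assumption. It illustrates one mechanism by which such a baseline can use more fan energy: once switched on, the fan runs at full speed until the temperature falls well below the upper threshold, instead of holding the temperature just under its limit.

```python
def on_off_fan_control(temp_c: float, fan_on: bool,
                       on_above_c: float = 80.0, off_below_c: float = 70.0) -> bool:
    """Hypothetical switching baseline: fan at full speed above one threshold, off below another.

    Inside the hysteresis band the previous state is kept, which prevents rapid toggling.
    """
    if temp_c >= on_above_c:
        return True
    if temp_c <= off_below_c:
        return False
    return fan_on


# Trace a rising then falling temperature through the band.
fan_on = False
for t in [65.0, 75.0, 82.0, 75.0, 68.0]:
    fan_on = on_off_fan_control(t, fan_on)
    print(t, "full speed" if fan_on else "off")
```

At 75 °C the fan is off on the way up but still at full speed on the way down; that memory is the hysteresis, and the full-speed interval is where a continuous duty-cycle controller can save energy.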

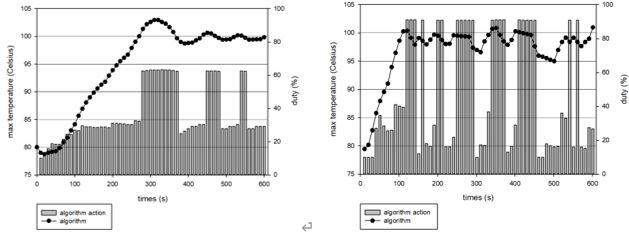

Although algorithm-based control already improves energy efficiency, there is still room for further energy savings. The same framework was therefore retained and only the fan control method was changed, in order to compare temperature control and energy efficiency. Fig. 4 shows the result of adjusting only one fan at a time: this approach responds less quickly to temperature changes at the start, but temperature control is more stable later on, with smoother fluctuations. In Fig. 5, the fans are divided into several zones, each containing several fans, and the fans are adjusted zone by zone. This gives a more immediate temperature response; however, because more fans change at each step, the impact on the overall flow rate is larger, the temperature oscillates more sharply, and the energy savings are relatively poorer.
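The difference between the two strategies lies in how one control action is applied to the fan array. A minimal sketch, with hypothetical function names and an assumed layout of six fans in two zones, contrasts changing a single fan per step with changing every fan in a zone.

```python
from typing import List


def clamp(duty: float) -> float:
    # Duty cycles are limited to the physical range [0, 1].
    return min(1.0, max(0.0, duty))


def adjust_single_fan(duties: List[float], fan_index: int, new_duty: float) -> List[float]:
    """One fan changes per control step; every other fan keeps its duty cycle."""
    updated = list(duties)
    updated[fan_index] = clamp(new_duty)
    return updated


def adjust_zone(duties: List[float], zones: List[List[int]], zone_index: int,
                new_duty: float) -> List[float]:
    """All fans in one zone change together, so each step moves more airflow."""
    updated = list(duties)
    for i in zones[zone_index]:
        updated[i] = clamp(new_duty)
    return updated


duties = [0.5] * 6
zones = [[0, 1, 2], [3, 4, 5]]  # hypothetical layout: two zones of three fans
print(adjust_single_fan(duties, 2, 0.8))  # [0.5, 0.5, 0.8, 0.5, 0.5, 0.5]
print(adjust_zone(duties, zones, 1, 0.8))  # [0.5, 0.5, 0.5, 0.8, 0.8, 0.8]
```

Here one zone-level action changes three duty cycles at once, so each step perturbs the total airflow more than a single-fan step does, which is one reason the zone-based scheme reacts faster but disturbs the flow more.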

■Conclusions

By training the model on a large database of operating data, we can reduce the time cost of designing server configurations, select more appropriate actions for different operating conditions, and lower the energy the server spends on heat dissipation. The results so far indicate that the algorithm can keep the heat source temperature within its limit to avoid overheating, dissipate heat using the maximum effective temperature difference, and rely on the fans only as needed, thereby reducing fan energy consumption and improving the overall energy savings of the system. The intelligent control will be further optimized and extended to cabinet-level and server-room applications.

■References

[1] Energy Innovation: Policy and Technology LLC, "How Much Energy Do Data Centers Really Use?," 2020. https://energyinnovation.org/2020/03/17/how-much-energy-do-data-centers-really-use/

[2] H. Jonsson and B. Moshfegh, "Modeling of the thermal and hydraulic performance of plate fin, strip fin, and pin fin heat sinks-influence of flow bypass," IEEE Transactions on Components and Packaging Technologies, vol. 24, no. 2, pp. 142-149, June 2001.

Author

Professor Wang Qichuan

Expertise | Electronic heat dissipation, Cloud computing energy management, Desalination, Development and application of non-traditional fluid machinery, Refrigeration and air conditioning, Supercritical fluid systems and heat exchangers (software and hardware), LED heat dissipation, Micro-channel heat flow design (single- and two-phase fluid applications)